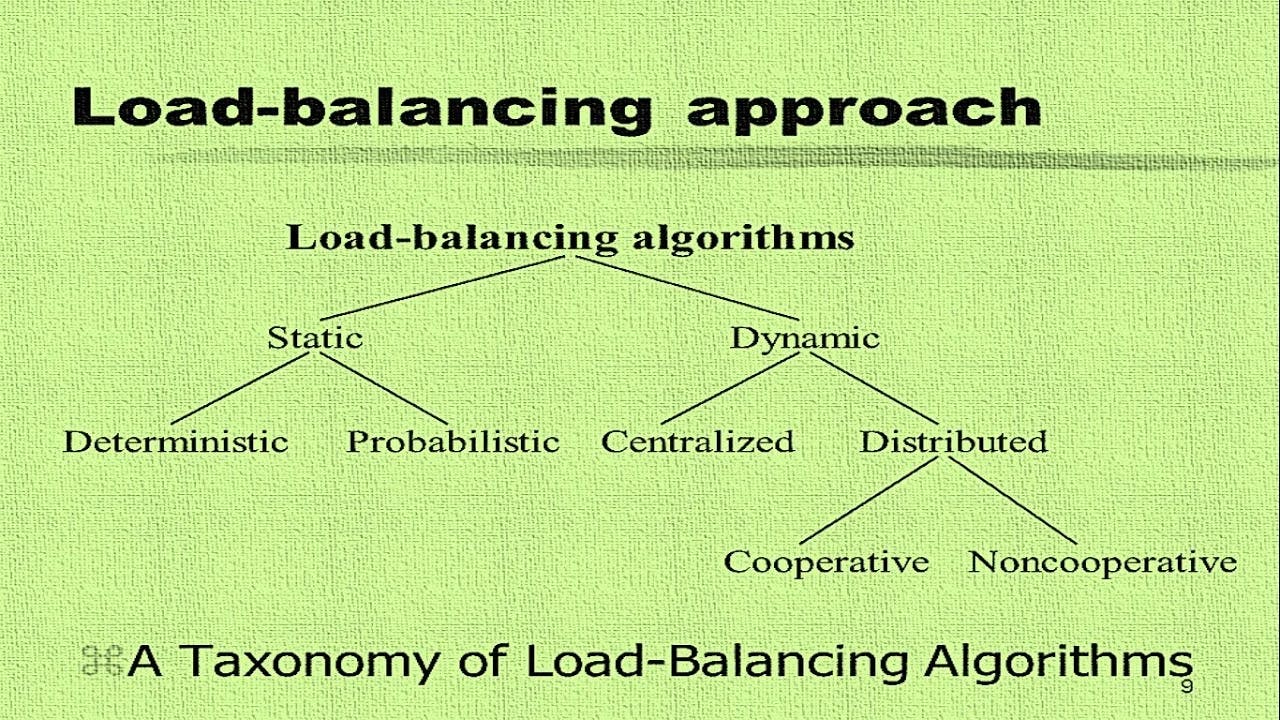

Load Balancing Algorithms

Load balancing algorithms spread incoming network traffic or workload across multiple servers or resources in a distributed computing environment. The primary goals are to optimize resource utilization, maximize throughput, minimize response time, and avoid overloading any single resource.

Static

Round Robin: A simple and widely used method. Each incoming request is assigned to the next server in the list, wrapping back to the first server after the last. Every server receives the same number of requests, which balances the load evenly only when servers have similar capacity and requests have similar cost.
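The cycling behaviour can be sketched in a few lines. This is a minimal illustration, not a production balancer; the server names and the `round_robin` helper are made up for the example.

```python
from itertools import cycle

def round_robin(servers):
    """Return an iterator that yields servers in a fixed, repeating order."""
    return cycle(servers)

picker = round_robin(["a", "b", "c"])  # hypothetical server names
assignments = [next(picker) for _ in range(5)]
print(assignments)  # ['a', 'b', 'c', 'a', 'b']
```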

Sticky Round Robin: A variation of Round Robin that adds session persistence. A client's first request is assigned by Round Robin, and all later requests from that client go to the same server.
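One way to picture the stickiness is a lookup table in front of a Round Robin picker. The sketch below assumes clients are identified by a string such as a session ID; the `StickyRoundRobin` class and its names are illustrative only.

```python
from itertools import cycle

class StickyRoundRobin:
    def __init__(self, servers):
        self._next_server = cycle(servers)
        self._assigned = {}  # client_id -> server chosen on first contact

    def pick(self, client_id):
        # New clients get the next server in rotation; known clients keep theirs.
        if client_id not in self._assigned:
            self._assigned[client_id] = next(self._next_server)
        return self._assigned[client_id]

lb = StickyRoundRobin(["a", "b"])
picks = [lb.pick(c) for c in ["alice", "bob", "alice", "carol", "bob"]]
print(picks)  # ['a', 'b', 'a', 'a', 'b']
```

A real balancer would also need to expire old entries and reassign clients whose server has gone away, which this sketch leaves out.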

Weighted Round Robin: Similar to Round Robin, but servers are assigned different weights based on their capacities. Servers with higher weights receive more requests than those with lower weights.
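A simple way to honour weights is to repeat each server in the rotation as many times as its weight. The sketch below assumes positive integer weights; `weighted_round_robin` and the server names are hypothetical.

```python
from collections import Counter
from itertools import cycle

def weighted_round_robin(weights):
    """weights: dict mapping server -> positive integer weight."""
    expanded = [server for server, w in weights.items() for _ in range(w)]
    return cycle(expanded)

picker = weighted_round_robin({"big": 3, "small": 1})
assignments = [next(picker) for _ in range(8)]
print(Counter(assignments))  # Counter({'big': 6, 'small': 2})
```

This version sends requests to the same server in bursts (three to `big`, then one to `small`). Some balancers interleave the picks more smoothly, which this sketch does not attempt.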

IP/URL Hash: A hash function is applied to the client's IP address or to the requested URL to determine which server will handle the request. Requests with the same IP address (or the same URL) are consistently directed to the same server, as long as the set of servers does not change.
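The mapping from key to server can be sketched with a stable hash and a modulo. The choice of SHA-256 here is an assumption made for the example (Python's built-in `hash` is randomized per process for strings, so it would not give repeatable results); `pick_by_hash` is a hypothetical helper.

```python
import hashlib

def pick_by_hash(key, servers):
    """Map a key (client IP or URL) to one server, deterministically."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]

servers = ["a", "b", "c"]
first = pick_by_hash("203.0.113.7", servers)
second = pick_by_hash("203.0.113.7", servers)
print(first == second)  # True: the same key always maps to the same server
```

With plain modulo hashing, adding or removing a server remaps most keys. Consistent hashing is the usual way to limit that, and is outside the scope of this sketch.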

Dynamic

Least Connections: This strategy directs each new request to the server with the fewest active connections. It is useful when servers have varying capacities or loads, or when the workload changes over time, because the distribution follows the current workload of each server. It has limitations: it does not provide session persistence on its own, and it relies on server health monitoring, since a failing server may show few connections and attract more traffic.
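The selection step reduces to taking a minimum over the current connection counts. The sketch assumes the balancer already tracks those counts in a dictionary; the names and numbers are illustrative.

```python
def least_connections(active):
    """active: dict mapping server -> number of open connections."""
    return min(active, key=active.get)

active = {"a": 12, "b": 4, "c": 9}
chosen = least_connections(active)
active[chosen] += 1  # the new request opens one more connection
print(chosen, active[chosen])  # b 5
```

The count must also be decremented when a connection closes, which a real balancer would do in its connection-handling code.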

Weighted Least Connections: Similar to Least Connections, but each server also has a weight. A common formulation picks the server with the lowest ratio of active connections to weight, so a higher-weight server can hold more connections before it is passed over.
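As a sketch, assume the selection score is the number of active connections divided by the server's weight. That scoring rule is one common reading, not the only one, and the helper name is hypothetical.

```python
def weighted_least_connections(active, weights):
    """Pick the server with the lowest connections-per-weight ratio."""
    return min(active, key=lambda server: active[server] / weights[server])

active = {"big": 6, "small": 3}
weights = {"big": 4, "small": 1}
# Scores: big = 6 / 4 = 1.5, small = 3 / 1 = 3.0
chosen = weighted_least_connections(active, weights)
print(chosen)  # big
```

Note that `big` wins even though it has more connections in absolute terms, because its weight says it can carry four times as many.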

Least Response Time: The server that responded fastest to its most recent request is chosen for the next request, so the load follows observed server performance. It suits applications where low latency is a priority. Because response times fluctuate, a single measurement can be misleading, and effective server health monitoring is still needed.
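Because a single measurement is noisy, the sketch below smooths response times with an exponentially weighted moving average instead of using only the last sample. That smoothing, the `alpha` value, and the class name are assumptions added for illustration.

```python
class LeastResponseTime:
    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha  # weight given to the newest sample
        # Starting at 0.0 means unmeasured servers are tried first.
        self.avg_ms = {server: 0.0 for server in servers}

    def pick(self):
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, server, elapsed_ms):
        old = self.avg_ms[server]
        self.avg_ms[server] = (1 - self.alpha) * old + self.alpha * elapsed_ms

lb = LeastResponseTime(["a", "b"])
lb.record("a", 100)  # a averages about 30 ms
lb.record("b", 20)   # b averages about 6 ms
fast = lb.pick()     # "b"
lb.record("b", 500)  # a slow response pushes b's average to about 154 ms
after_spike = lb.pick()  # "a"
```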

Randomized Load Balancing: Requests are assigned to servers at random. It is simple and evens out over many requests, but it ignores server state, so it may not balance load as well as the other algorithms, especially over short periods.
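A uniform random pick is a one-liner. The sketch below counts assignments over many requests to show the roughly even split; the seed is there only to make the run repeatable, and `pick_random` is a hypothetical helper.

```python
import random
from collections import Counter

def pick_random(servers, rng):
    """Choose one server uniformly at random."""
    return rng.choice(servers)

rng = random.Random(42)  # seeded only so the sketch is repeatable
servers = ["a", "b", "c"]
counts = Counter(pick_random(servers, rng) for _ in range(9000))
print(counts)  # each server ends up with roughly 3000 requests
```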

Chained Failover: Servers are arranged in an ordered chain of a primary and one or more backups. If the primary server fails, the request is forwarded to the next server in the chain, and so on until an available server is found. Chained failover fits applications where service availability matters most and the added complexity and potential latency are acceptable. It needs careful planning, testing, and monitoring to work reliably in practice.
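The walk down the chain can be sketched as a loop over an ordered list. The `is_healthy` callback stands in for whatever health check the deployment uses; it and the server names are assumptions for the example.

```python
def chained_failover(chain, is_healthy):
    """Return the first healthy server in the chain, primary first."""
    for server in chain:
        if is_healthy(server):
            return server
    raise RuntimeError("no healthy server in chain")

down = {"primary"}  # pretend the primary has failed
chain = ["primary", "backup-1", "backup-2"]
chosen = chained_failover(chain, lambda server: server not in down)
print(chosen)  # backup-1
```

Each unhealthy hop adds a health check before the request is served, which is where the extra latency comes from.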

Least Bandwidth: This algorithm directs traffic to the server with the least amount of traffic (measured by bandwidth usage). It is beneficial when servers have different available bandwidths.
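One way to measure "current bandwidth usage" is to sum the bytes each server sent within a short sliding window. The window length, the explicit `now` timestamps, and the `BandwidthTracker` class are all assumptions made for this sketch.

```python
from collections import deque

class BandwidthTracker:
    def __init__(self, servers, window_s=1.0):
        self.window_s = window_s
        self.samples = {s: deque() for s in servers}  # (timestamp, bytes)

    def record(self, server, now, num_bytes):
        self.samples[server].append((now, num_bytes))

    def _recent_bytes(self, server, now):
        queue = self.samples[server]
        # Drop samples that have fallen out of the window.
        while queue and queue[0][0] <= now - self.window_s:
            queue.popleft()
        return sum(num_bytes for _, num_bytes in queue)

    def pick(self, now):
        return min(self.samples, key=lambda s: self._recent_bytes(s, now))

bt = BandwidthTracker(["a", "b"])
bt.record("a", 0.0, 5000)
bt.record("b", 0.2, 800)
first = bt.pick(0.5)   # "b": 800 bytes vs 5000 bytes in the window
bt.record("b", 0.9, 9000)
second = bt.pick(1.1)  # "a": its only sample has aged out of the window
```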

Dynamic Load Balancing: Continuous monitoring of server loads is performed, and the algorithm dynamically adjusts the distribution of incoming requests based on the current server loads.
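As a rough sketch of the feedback loop, assume each server reports a utilisation figure between 0 and 1 and that requests are assigned at random in proportion to spare capacity. Both the metric and the proportional rule are illustrative assumptions, as are the function names.

```python
import random

def weights_from_load(load):
    """load: dict server -> utilisation in [0, 1]. Returns spare capacity."""
    return {server: max(0.0, 1.0 - used) for server, used in load.items()}

def pick(load, rng):
    weights = weights_from_load(load)
    servers = list(weights)
    if sum(weights.values()) == 0:
        return rng.choice(servers)  # everything saturated: fall back to uniform
    return rng.choices(servers, weights=[weights[s] for s in servers], k=1)[0]

rng = random.Random(0)  # seeded only so the sketch is repeatable
load = {"a": 1.0, "b": 0.2}  # a is saturated, b has spare capacity
picks = [pick(load, rng) for _ in range(20)]
print(set(picks))  # {'b'}: the saturated server receives nothing

load["a"] = 0.5  # a fresh report arrives and a becomes eligible again
```

In a running system the `load` dictionary would be refreshed continuously by the monitoring component, so the distribution shifts as reports come in.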